The Newton-Raphson algorithm is used to obtain the optimal parameters of the logistic regression model

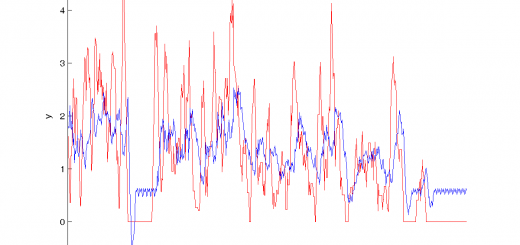

Create a demonstration data set

% independent variable, 20 samples
x = [[-8:1]'; [2:11]'];
% dependent variable of zeros and ones
y = [zeros(9,1); 1; 0; ones(9,1)];
% construct the matrix of independent samples
X = [ones(size(x,1),1) x];
% plot the initial data
h = figure; hold on
plot(X(:,2), y,'k*');
hold on
txtlegend = {'initial data'};
colors = {'r-','g-', 'b-', 'c-', 'm-', 'y-'};
ncolor = 0;

Set the constant for iteration convergence

% the algorithm stops when the sum of squared changes of the parameters between two iterations is at most TolFun

TolFun = 10^-3;

Define the initial value of the parameters

% 1st element (intercept): the logit of the mean value of y
b0 = log(mean(y)/(1-mean(y)));
% column vector of parameters; the remaining coefficients start at zero
b = [b0 zeros(1, size(X,2)-1)]';
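The choice of the starting intercept can be motivated by a short derivation. The sketch below assumes an intercept-only model (all slopes fixed at zero) and writes the sample mean of y as \bar{y}; these symbols are introduced here for illustration and are not part of the script.

```latex
% Intercept-only model: every sample has the same probability p
p = \frac{1}{1 + e^{-b_0}}, \qquad
\ell(b_0) = \sum_{i=1}^{n} \bigl[ y_i \log p + (1 - y_i) \log(1 - p) \bigr]

% Setting the derivative with respect to p to zero
\frac{\partial \ell}{\partial p}
  = \frac{\sum_i y_i}{p} - \frac{n - \sum_i y_i}{1 - p} = 0
\;\Longrightarrow\; p = \bar{y}
\;\Longrightarrow\; b_0 = \log\frac{\bar{y}}{1 - \bar{y}}

% For the demonstration data: \bar{y} = 10/20 = 0.5, hence b_0 = \log 1 = 0
```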

The Newton-Raphson procedure

while true
    % the linear predictor (the logit of p) is a function of the parameters
    z = X*b;
    % recover the regression by applying the inverse logit
    p = 1./(1+exp(-z));
    % calculate the weights of the samples
    w = p.*(1-p);
    % calculate the dependent variable for this step of least squares
    u = z + (y-p)./w;
    % plot the results of this step
    plot(x, p, colors{mod(ncolor,length(colors))+1});
    ncolor = ncolor + 1; % change color
    txtlegend{end+1} = ['iteration ', num2str(ncolor)];
    % store old parameters
    b_old = b;
    % calculate new parameters with weighted least squares
    b = (X'*diag(w)*X) \ (X'*diag(w)*u);
    % terminate the iterations if changes of the parameters are small
    if sum((b - b_old).^2) <= TolFun, break; end
end
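The weighted least-squares update in the loop is the Newton-Raphson step written in a different form. The following sketch checks this numerically for a single iteration. It rebuilds the demonstration data so that it runs on its own; the names b_wls, b_newton, g and H and the starting point b = [0; 0] are assumptions made for this illustration only.

```matlab
% Same demonstration data as in the script
x = [(-8:1)'; (2:11)'];
y = [zeros(9,1); 1; 0; ones(9,1)];
X = [ones(size(x,1),1) x];
b = [0; 0];                 % starting point (assumed for this check)

z = X*b;                    % linear predictor
p = 1./(1+exp(-z));         % fitted probabilities
w = p.*(1-p);               % sample weights

% (a) weighted least-squares form, as in the loop
u = z + (y-p)./w;
b_wls = (X'*diag(w)*X) \ (X'*diag(w)*u);

% (b) explicit Newton-Raphson step b - H\g, where
%     g = X'*(y-p) is the gradient of the log-likelihood and
%     H = -X'*diag(w)*X is its Hessian
g = X'*(y-p);
H = -X'*diag(w)*X;
b_newton = b - H \ g;

% the two updates should agree up to rounding error
disp(max(abs(b_wls - b_newton)));
```

The agreement follows from X'*diag(w)*u = X'*diag(w)*X*b + X'*(y-p), so solving the weighted least-squares system returns b plus the Newton correction.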

Show the result

% calculate recovered dependent variable
p = 1./(1+exp(-X*b));
% plot the result
txtlegend{end+1} = 'recovered data';
plot(x, p,'rs');
legend(txtlegend);
axis tight
xlabel('x');
ylabel('p = (1+e^{-z})^{-1}, z = b_0+b_1x');

Notes

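MATLAB's Statistics and Machine Learning Toolbox provides the glmfit and glmval functions, which fit and evaluate generalized linear models, including logistic regression. Use them for professional purposes.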
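As a rough sketch of what that could look like for the demonstration data, assuming the Statistics and Machine Learning Toolbox is installed (the variable names b_glm and p_glm are chosen here for illustration):

```matlab
% Requires the Statistics and Machine Learning Toolbox
x = [(-8:1)'; (2:11)'];
y = [zeros(9,1); 1; 0; ones(9,1)];

% glmfit adds the intercept term itself, so pass x without the column of ones
b_glm = glmfit(x, y, 'binomial', 'link', 'logit');

% predicted probabilities at the sample points
p_glm = glmval(b_glm, x, 'logit');

disp(b_glm');
```

The coefficients should be close to those found by the loop, with small differences expected because the stopping rules differ.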

return